A team of researchers from Pompeu Fabra University and the Institute of Optics (CSIC) has developed an algorithm that transfers the style of a realistic reference image to a video, so that the video adopts the image's tone, color palette and contrast.

The algorithm can process a camera's raw video output in real time, so the resulting video can be viewed as it is being recorded. It also lets the user mark correspondences between regions of interest in the image and in the video.

The resulting videos are free of artifacts and closely approximate the desired look, which saves time in pre-production, shooting and post-production. In experiments with volunteers, the method outperformed several current state-of-the-art systems.

Color in cinema

Color plays a fundamental role in cinema, since it strongly affects how we perceive the film and its characters. Color can create harmony or tension within a scene, or draw attention to a key theme. A carefully chosen color palette evokes a mood and sets the tone for the film.

Ideally, the desired color appearance is conceived in advance, implemented during recording, and then carefully enhanced and rendered by the colorist during post-production, in a stage called color grading. The whole process is costly in both budget and time, and can require a significant amount of work from highly trained artists and technicians.

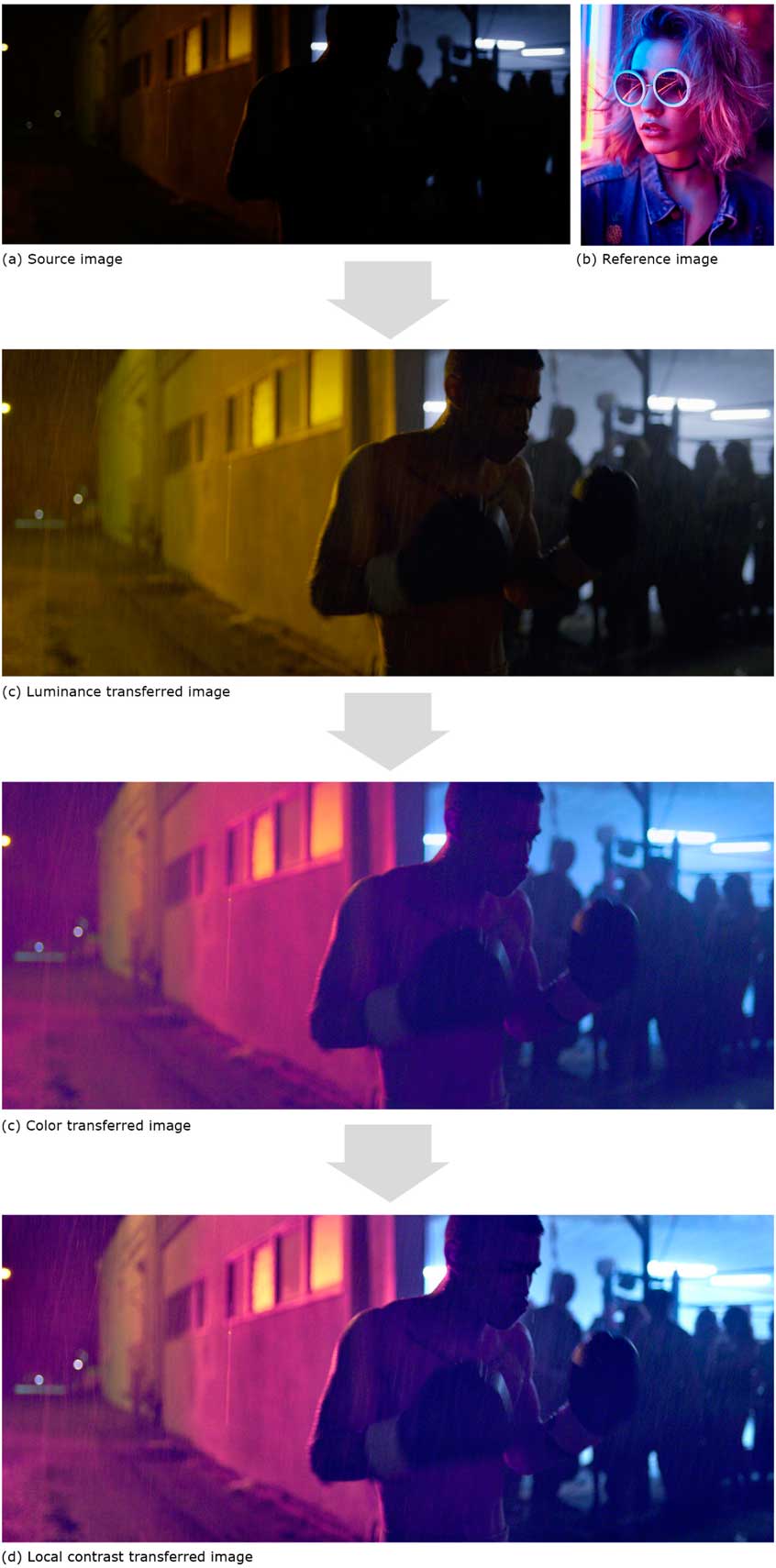

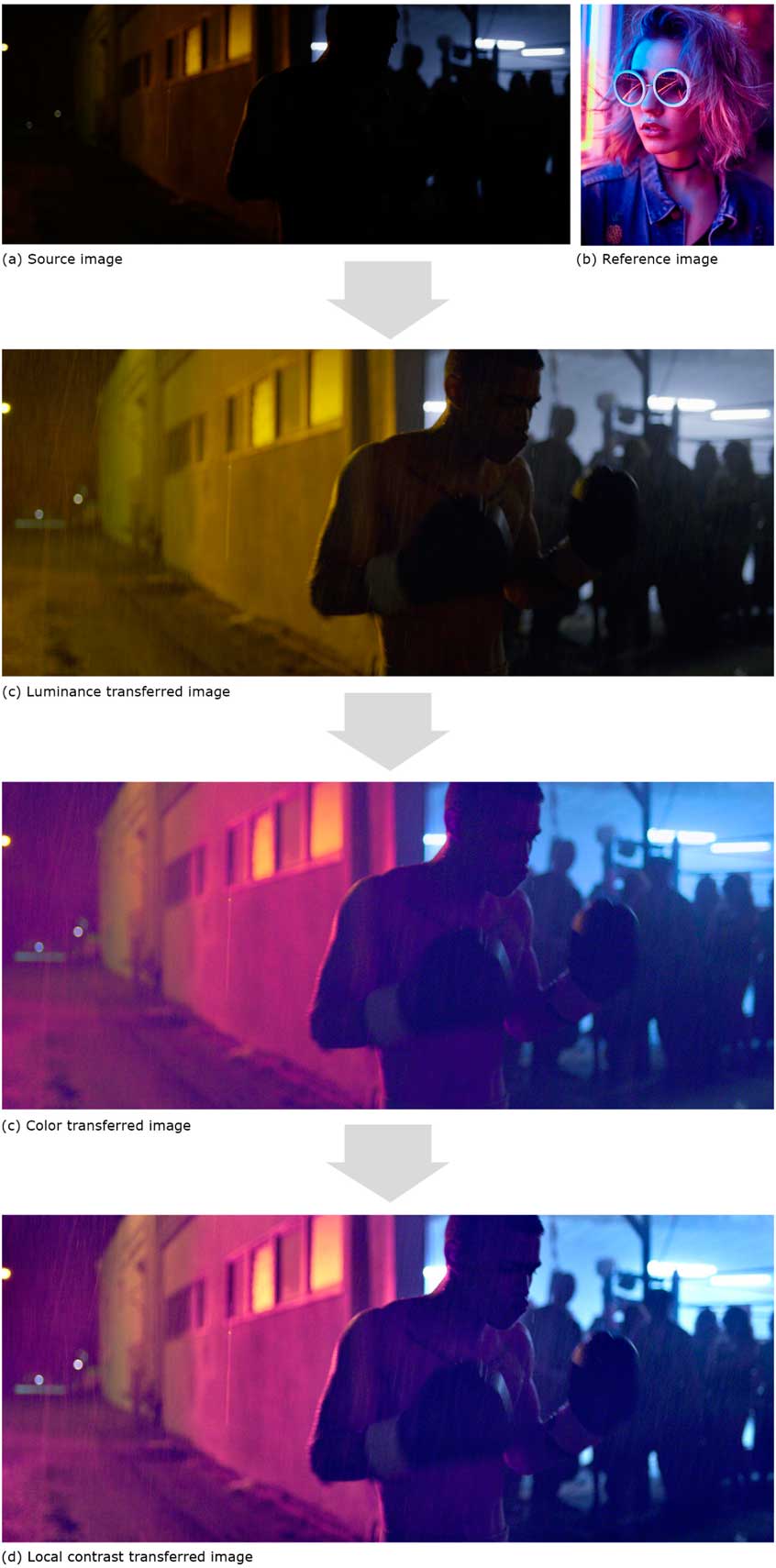

In the paper, we propose a method that can lighten the workload of filmmakers and colorists during filming and post-production. In many cases, the director wants to emulate the style of a reference image: for example, a still from an existing film, a photograph, or even a sequence previously shot for the current film.

Given this reference image, our system automatically transfers its style in terms of tone, color palette and contrast. The algorithm is applied directly to the raw images and produces a display-ready result that matches the style of the reference. Because the process has a low computational cost, it can be implemented in-camera, so lighting and scene elements can be adjusted on set while the resulting image is viewed on screen. Although the method is intended to replace some post-production tasks, it still allows further refinement, both on set and in post-production.
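The article does not spell out the algorithm. As a rough illustration of what transferring "tone, color palette and contrast" from a reference can mean, the sketch below uses a different and much simpler technique: classic statistics matching, which shifts and scales each color channel so its mean and standard deviation match those of the reference (in the spirit of Reinhard et al.'s color transfer, but done in RGB rather than a perceptual color space, for brevity). This is an assumption for illustration, not the team's method; the names `channel_stats` and `transfer_color` are hypothetical, and pixels are assumed to be RGB tuples with values in [0, 1].

```python
from statistics import fmean, pstdev

# Assumed pixel format (hypothetical): RGB tuples with channel values in [0, 1].
Pixel = tuple[float, float, float]


def channel_stats(pixels: list[Pixel]) -> list[tuple[float, float]]:
    """Return (mean, population standard deviation) for each RGB channel."""
    stats = []
    for c in range(3):
        values = [p[c] for p in pixels]
        stats.append((fmean(values), pstdev(values)))
    return stats


def transfer_color(pixels: list[Pixel], reference: list[Pixel],
                   eps: float = 1e-6) -> list[Pixel]:
    """Match each channel's mean and spread to those of the reference image.

    Illustrative statistics-matching transfer, NOT the published algorithm.
    Shifting the mean moves tone and color cast; scaling the spread changes contrast.
    """
    src = channel_stats(pixels)
    ref = channel_stats(reference)
    out: list[Pixel] = []
    for p in pixels:
        mapped = []
        for c in range(3):
            (mean_s, std_s), (mean_r, std_r) = src[c], ref[c]
            # Normalize by the source statistics, then re-scale to the reference;
            # eps avoids division by zero on flat (constant) channels.
            v = (p[c] - mean_s) * std_r / (std_s + eps) + mean_r
            mapped.append(min(1.0, max(0.0, v)))  # clip to the valid range
        out.append((mapped[0], mapped[1], mapped[2]))
    return out


frame = [(0.2, 0.2, 0.2), (0.4, 0.4, 0.4)]
reference = [(0.5, 0.3, 0.1), (0.7, 0.5, 0.3)]
print(transfer_color(frame, reference))  # approximately [(0.5, 0.3, 0.1), (0.7, 0.5, 0.3)]
```

Because the mapping is a per-channel affine function computed from a handful of summary statistics, it is cheap to evaluate, which hints at why this kind of transfer can run in real time. The published method goes further: it works directly on raw camera data and supports user-marked region correspondences, neither of which this sketch attempts.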

Video Style Transfer

Extending an image-based method to video sequences is not trivial: applying style transfer independently to each frame can cause strong texture flickering and temporal inconsistency. It is also computationally expensive to calculate the parameters for every frame without taking into account the content that consecutive frames share. To avoid these problems, in the article we propose a simple but effective method designed to handle these limitations.
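One generic way to reduce the flicker and redundant computation described above, shown here purely as an illustration and not as the method from the article, is to stop estimating transfer parameters independently for each frame. In this sketch the per-frame color statistics are smoothed over time with an exponential moving average and, optionally, re-estimated only every few frames, so consecutive frames share most of their parameters. The statistics-matching transfer itself, and the names `transfer_video`, `alpha` and `every`, are hypothetical choices for illustration.

```python
from statistics import fmean, pstdev
from typing import Optional

Pixel = tuple[float, float, float]  # assumed: RGB values in [0, 1]
Stats = list[tuple[float, float]]   # (mean, std) for each channel


def channel_stats(pixels: list[Pixel]) -> Stats:
    stats = []
    for c in range(3):
        values = [p[c] for p in pixels]
        stats.append((fmean(values), pstdev(values)))
    return stats


def smooth(prev: Optional[Stats], cur: Stats, alpha: float) -> Stats:
    """Exponential moving average; a small alpha makes parameters change slowly."""
    if prev is None:
        return cur
    return [(alpha * mc + (1 - alpha) * mp, alpha * sc + (1 - alpha) * sp)
            for (mp, sp), (mc, sc) in zip(prev, cur)]


def transfer_video(frames: list[list[Pixel]], reference: list[Pixel],
                   alpha: float = 0.1, every: int = 1,
                   eps: float = 1e-6) -> list[list[Pixel]]:
    """Hypothetical temporally smoothed statistics transfer (illustration only)."""
    ref = channel_stats(reference)  # the reference is analyzed only once
    state: Optional[Stats] = None
    result = []
    for i, frame in enumerate(frames):
        # Re-estimate source statistics only every `every` frames (frame 0 always),
        # reusing the smoothed parameters in between.
        if i % every == 0:
            state = smooth(state, channel_stats(frame), alpha)
        mapped_frame = []
        for p in frame:
            mapped = []
            for c in range(3):
                (mean_s, std_s), (mean_r, std_r) = state[c], ref[c]
                v = (p[c] - mean_s) * std_r / (std_s + eps) + mean_r
                mapped.append(min(1.0, max(0.0, v)))  # clip to [0, 1]
            mapped_frame.append((mapped[0], mapped[1], mapped[2]))
        result.append(mapped_frame)
    return result
```

With `alpha=1` and `every=1`, the sketch reduces to independent per-frame transfer, which is exactly the setting that can flicker. Smaller values of `alpha` trade responsiveness to genuine lighting changes for temporal stability, and larger values of `every` save computation at the cost of reacting more slowly.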

Results and experimental validation

We tested style transfer on a wide range of reference sources and sequences, whose luminance and color gamut varied from one to another.

Fifteen volunteers took part in these experiments, validating our results by comparing them with current still-image style transfer methods. The experiments showed that the proposed method surpasses state-of-the-art algorithms from the academic literature and is comparable to the best industry methods for still images.

As future work, we intend to add a number of extensions to our method, such as foreground/background separation and the use of keyframes, to overcome some of the limitations that have been observed.


This work is a collaboration between the Department of Information and Communication Technologies of Pompeu Fabra University and the Institute of Optics of the CSIC.