Using Decoupled Features for Photorealistic Style Transfer

Madrid / October 10, 2023

A team of researchers from York University in Toronto and the CSIC Institute of Optics (IO-CSIC) has developed a new automatic method for photorealistic style transfer in images and videos. Based on vision science principles and a new mathematical formulation, the method shows significant improvements over current technologies in visual quality and performance.

The proposed method is designed to overcome the limitations of artificial intelligence methods, which have not been adopted in this field because they often produce results with unacceptable visual artifacts.

What is style transfer?

The “style” of an image or video sequence is difficult to define, partly because any feature of an image can be relevant to the perceived style. Lighting, scene, subject, optics, camera and post-processing can all affect how the viewer perceives an image and the sensations it conveys.

Film colorists, whose job is to beautify and perfect images in post-production after they have been shot, currently use manual adjustments to replicate the color and tone characteristics of reference footage and give their own footage the desired look.

In many cases, the director wants to emulate the style of a reference image, for example a still from an existing film, a photograph, or even a sequence shot earlier for the current film.

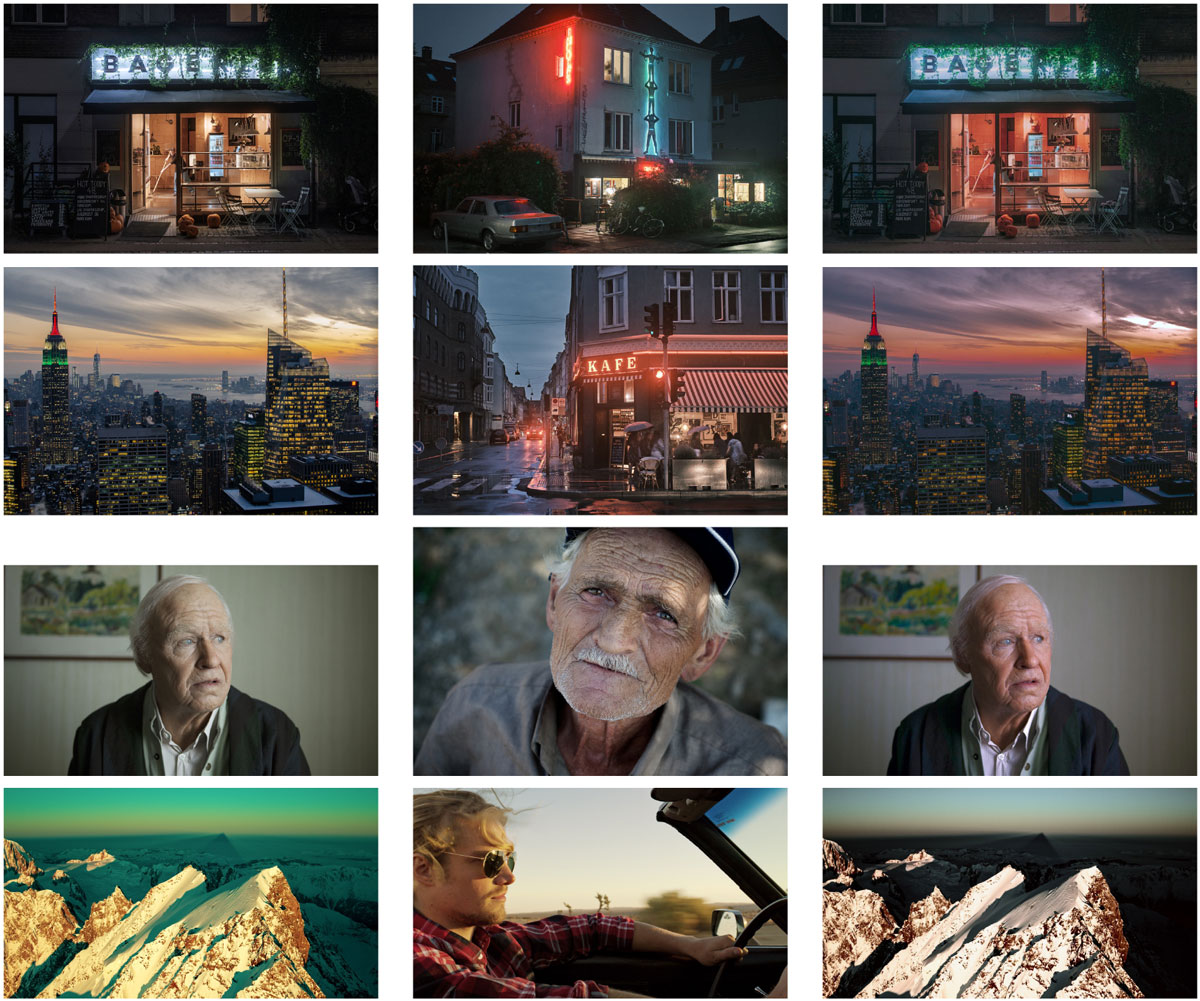

Given such a reference image, the method proposed by Canham and colleagues automatically transfers its style in terms of tone, color palette and contrast. The algorithm modifies the image in real time and generates a result that matches the style of the reference. Because the process has a low computational cost, the lighting and the elements of the scene can be adjusted during filming while the resulting image is viewed on screen.

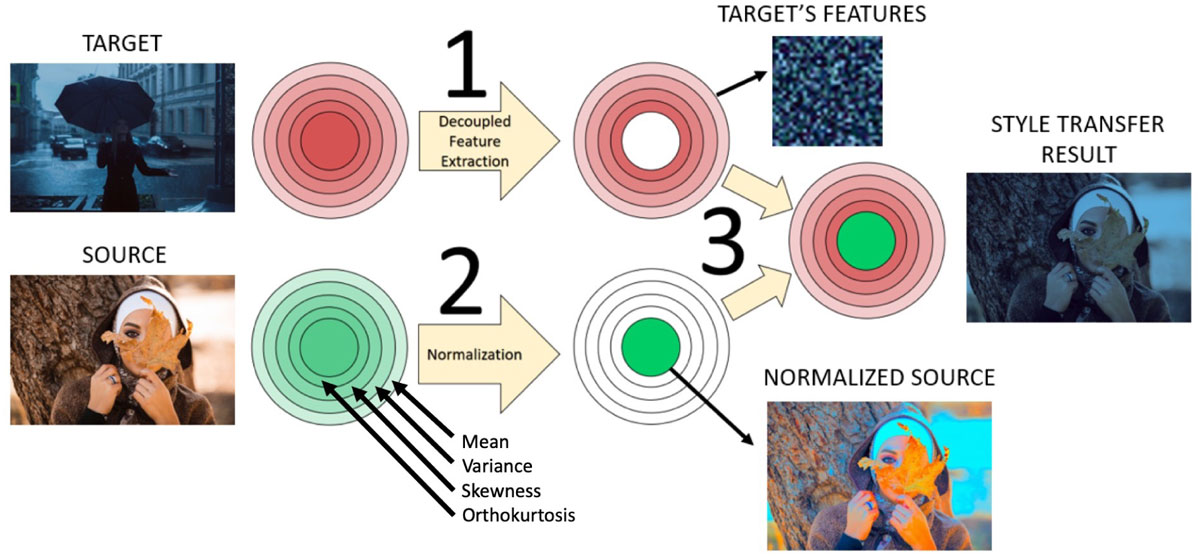

Part of the power of this development lies in decoupling and extracting in isolation the most important characteristics of the style, such as brightness, contrast, shadow/light balance and black/light balance. As a result, the system allows each of them to be modified without altering the rest and without creating unnatural effects.
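The idea of adjusting one style feature without disturbing another can be illustrated with a small sketch. The code below is not the authors' formulation; it is a deliberately simplified stand-in that treats brightness as the mean of the luminance values and contrast as their standard deviation. The function names (`brightness`, `contrast`, `transfer`) and the two flags are hypothetical and chosen only for this illustration.

```python
from statistics import fmean, pstdev


def brightness(lum):
    """Assumed proxy for brightness: mean luminance."""
    return fmean(lum)


def contrast(lum):
    """Assumed proxy for contrast: population standard deviation of luminance."""
    return pstdev(lum)


def transfer(source, reference, match_brightness=True, match_contrast=True):
    """Move selected features of `source` toward those of `reference`.

    Both inputs are flat lists of luminance values. Each flag switches one
    feature on or off, so the two features can be transferred independently.
    """
    src_mean, src_std = brightness(source), contrast(source)
    tgt_mean = brightness(reference) if match_brightness else src_mean
    tgt_std = contrast(reference) if match_contrast else src_std
    # Guard against a flat source image, which has no contrast to rescale.
    gain = tgt_std / src_std if src_std > 0 else 1.0
    # Rescale around the source mean, then shift to the target mean.
    return [(v - src_mean) * gain + tgt_mean for v in source]


source = [0.1, 0.2, 0.3, 0.4]
reference = [0.5, 0.7, 0.9]

# Transfer only brightness: the contrast of the source is left untouched.
only_brightness = transfer(source, reference, match_contrast=False)

# Transfer only contrast: the brightness of the source is left untouched.
only_contrast = transfer(source, reference, match_brightness=False)
```

Because the rescaling is centered on the source mean, changing the contrast does not move the brightness, and shifting the brightness does not change the spread. This sketch does not clip values to the displayable range or handle color, both of which a real pipeline would need.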

Applications

One of the proposed applications is the so-called homogenization of broadcast sources: footage of remote participants captured on mobile phones and webcams can be integrated into the studio broadcast without viewers noticing that it was recorded with a different camera and under different lighting.

Additionally, the team has demonstrated that the method can emulate the expensive optical diffusion filters used in photography and cinematography, giving artists a tool to test and apply various optical effects for unique textures and smooth images in post-production at a fraction of the cost.

This advancement promises to improve the quality and efficiency of style transfer in a variety of applications, from real-time video editing to image post-production and cinematography.

Demo software

The authors have published the software, which includes a graphical interface, together with the MATLAB source code at the following link: https://github.com/trevorcanham/dCoupST.

With it, users can test the style transfer with their own source and destination images.

IO-CSIC Communication

cultura.io@io.cfmac.csic.es
